In this Machine Learning Simulation, participants act as data-driven decision-makers, applying real-world ML models to strategic business and finance problems. Along the way, they explore how machine learning shapes financial and business decisions.

Supervised Learning Models: Linear regression, decision trees, random forests, classification algorithms

Model Selection & Evaluation: Accuracy, precision, recall, AUC, and overfitting

Interpretability vs. Performance: Trade-offs in stakeholder trust and model complexity

Bias and Fairness: Recognizing and mitigating ethical concerns

Data Quality: Handling missing values, outliers, and noisy inputs

ML in Business Contexts: Applying models to real problems in marketing, finance, HR, and operations

Cross-Functional Communication: Translating model outputs for decision-makers

Model Monitoring: How model performance degrades or changes over time
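For readers who want to see what the evaluation metrics listed above measure, here is a minimal Python sketch. It is not part of the simulation, which abstracts the coding away. The function name `classification_metrics` and the churn counts are hypothetical, chosen only to show why accuracy alone can flatter a model when the positive class is rare.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute common metrics from confusion-matrix counts."""
    total = tp + fp + fn + tn
    if total == 0:
        raise ValueError("confusion matrix is empty")
    accuracy = (tp + tn) / total
    # Precision: of the cases flagged positive, how many really were positive?
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: of the real positives, how many did the model catch?
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1: harmonic mean of precision and recall.
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical churn model scored on 1,000 customers, 60 of whom churn.
metrics = classification_metrics(tp=40, fp=10, fn=20, tn=930)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these illustrative counts, accuracy is 0.970 while recall is only 0.667: the model looks strong overall yet misses a third of the customers who actually churn.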

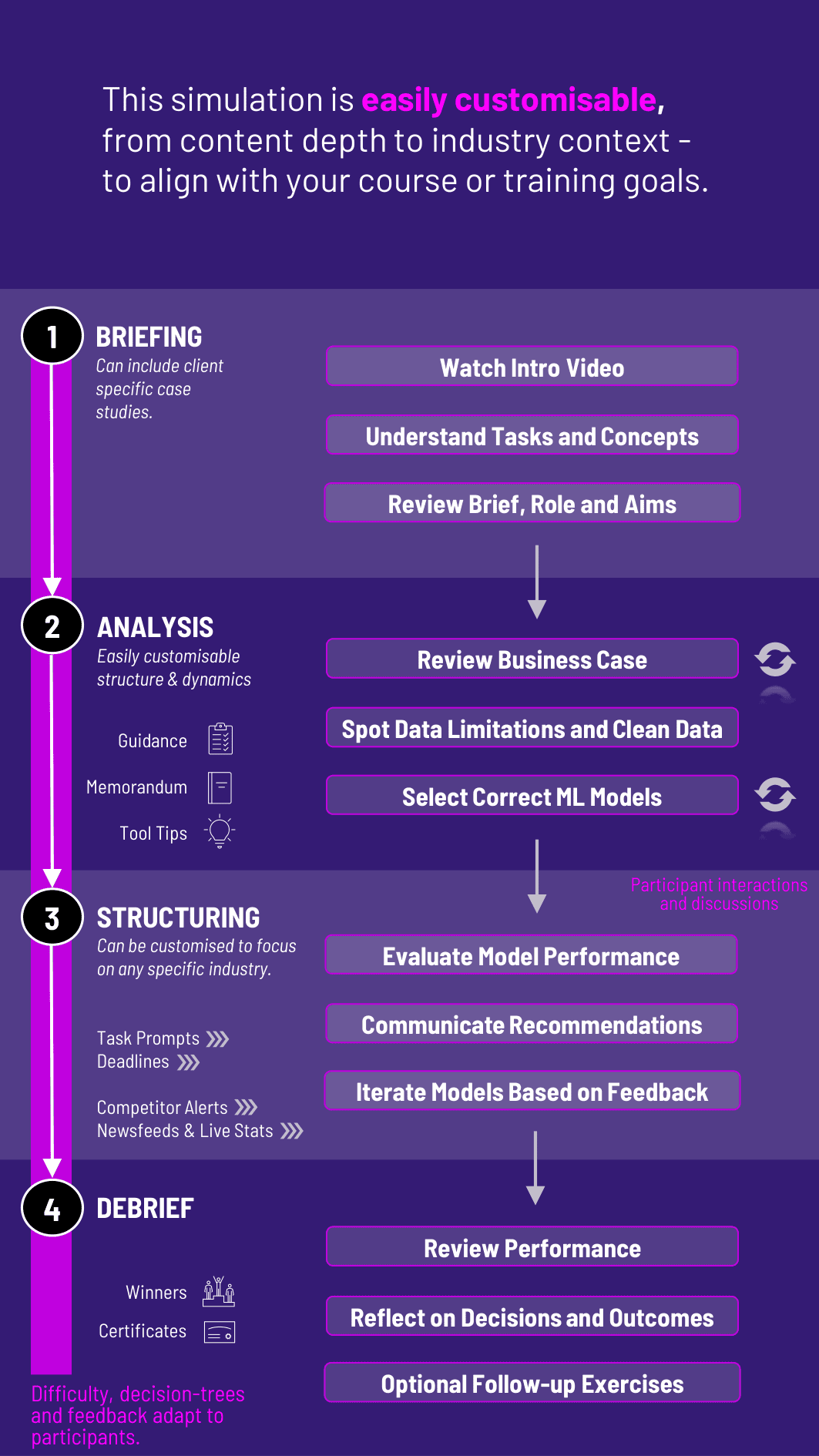

Receive a business case (e.g., predict customer churn, score loan applications, recommend products)

Review available datasets, spot data limitations, and clean the data

Select the right ML model(s) for the task

Evaluate model performance using metrics like accuracy and F1 score, and by reading the confusion matrix

Balance model complexity with interpretability, and choose what to deploy

Communicate recommendations to both business and technical teams

Receive feedback on the results and iterate based on model drift or stakeholder feedback
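As an illustration of the data-cleaning step above, the sketch below fills missing values in a numeric column with the median of the observed values. This is one simple approach among many and is not necessarily what the simulation does internally. The helper `impute_median` and the sample column are assumptions made for the example.

```python
from statistics import median


def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("no observed values to impute from")
    fill = median(observed)
    return [fill if v is None else v for v in values]


# Hypothetical monthly-spend column with two missing entries.
monthly_spend = [42.0, None, 55.0, 38.0, None, 61.0]
cleaned = impute_median(monthly_spend)
print(cleaned)
```

The median is often preferred over the mean here because a few extreme values do not pull it far, which matters when the same column also contains outliers.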

By the end of the simulation, participants will be more confident in:

Understanding key machine learning models and their strengths/weaknesses

Making strategic decisions using predictive analytics

Communicating ML results clearly to non-technical stakeholders

Applying machine learning to solve business problems, not just theoretical exercises

Navigating the ethics and risks of automated decision-making

Collaborating across technical and business roles

Understanding how to test, deploy, and monitor models over time

Becoming data-literate decision-makers in any role

The simulation is ideal for business students, product managers, consultants, and finance professionals looking to upskill in applied data science. Its flexible structure ensures that these objectives can be calibrated to match the depth, duration, and focus areas of each program.

1. Receive a Business Problem: Each round presents a real-world decision challenge where ML can help, such as reducing churn, increasing credit approvals, or improving customer targeting.

2. Explore the Dataset: Participants review a simplified dataset, checking for data quality, identifying variables, and understanding the context.

3. Choose and Train a Model: Participants select one or more ML models, compare outcomes, and avoid common pitfalls like overfitting.

4. Deploy and Act: They make business decisions based on model insights, then justify and communicate those decisions to key stakeholders.

5. Receive Feedback and Iterate: They learn from simulation results (such as missed targets, stakeholder concerns, or model drift) and refine their approach.
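Two ideas from the steps above, overfitting and model drift, can be sketched in a few lines of Python. This is a hedged illustration rather than the simulation's own logic: the function names, the 0.05 thresholds, and every score are hypothetical. Overfitting is approximated as a large gap between training and validation scores, and drift as a later score falling well below the baseline recorded at deployment.

```python
def overfit_gap(train_score, validation_score):
    """A large positive gap suggests the model memorised the training data."""
    return train_score - validation_score


def pick_model(results, max_gap=0.05):
    """Pick the best validation score among models with an acceptable gap."""
    candidates = {
        name: r for name, r in results.items()
        if overfit_gap(r["train"], r["validation"]) <= max_gap
    }
    if not candidates:
        return None
    return max(candidates, key=lambda name: candidates[name]["validation"])


def drifted_periods(baseline, later_scores, tolerance=0.05):
    """Indices of periods scoring more than `tolerance` below baseline."""
    return [i for i, s in enumerate(later_scores) if baseline - s > tolerance]


# Hypothetical pre-calculated scores for three candidate models.
results = {
    "logistic_regression": {"train": 0.82, "validation": 0.80},
    "decision_tree": {"train": 0.99, "validation": 0.78},
    "random_forest": {"train": 0.88, "validation": 0.84},
}
best = pick_model(results)
print(best)

# Hypothetical quarterly scores after deployment.
flagged = drifted_periods(0.84, [0.84, 0.83, 0.80, 0.76])
print(flagged)
```

In this made-up example, the decision tree is excluded despite its near-perfect training score because its validation score lags far behind, and only the final quarter is flagged as drifted.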

Do I need to know Python or R? No. The simulation abstracts the coding so participants can focus on interpretation, strategy, and business decisions.

Can this be used for technical audiences too? Yes. You can add advanced model evaluation, data cleaning, or even integration with notebooks if needed.

What kinds of models are included? Logistic regression, decision trees, random forests, and basic clustering methods, with pre-calculated results.

Does it include bias or ethical issues? Yes. Scenarios include model bias, fairness trade-offs, and stakeholder concerns.

Is this suitable for MBAs or business analysts? Absolutely. It’s designed for those who need to understand and lead with data, not just write the code.

Can the simulation be tailored for industry use cases? Yes. You can choose finance, retail, healthcare, HR, or supply chain problems.

What outputs do participants receive? Model performance summaries, business impact reports, and stakeholder response simulations.

Can it be run in teams? Yes. Teams can take on roles like data analyst, business leader, or compliance officer.

How long does it run? Anywhere from a 2-hour session to a multi-day workshop or full course module.

Is performance measured? Yes. Performance is assessed on model choices, communication clarity, business results, and stakeholder alignment.

Accuracy and strategic relevance of model selection

Interpretation and explanation of model performance

Ethical reasoning and fairness consideration

Quality of business decisions based on ML insights

Clarity of communication to non-technical stakeholders

Responsiveness to feedback or changing data dynamics

Assessment formats include in-simulation metrics, peer and instructor feedback, and optional debrief presentations or memos. This flexibility makes the simulation easy to integrate into graded university courses and into corporate assessment centres run by HR.

Join this 20-minute webinar, followed by a Q&A session, to immerse yourself in the simulation.

or

Book a 15-minute Zoom demo with one of our experts to explore how the simulation can benefit you.